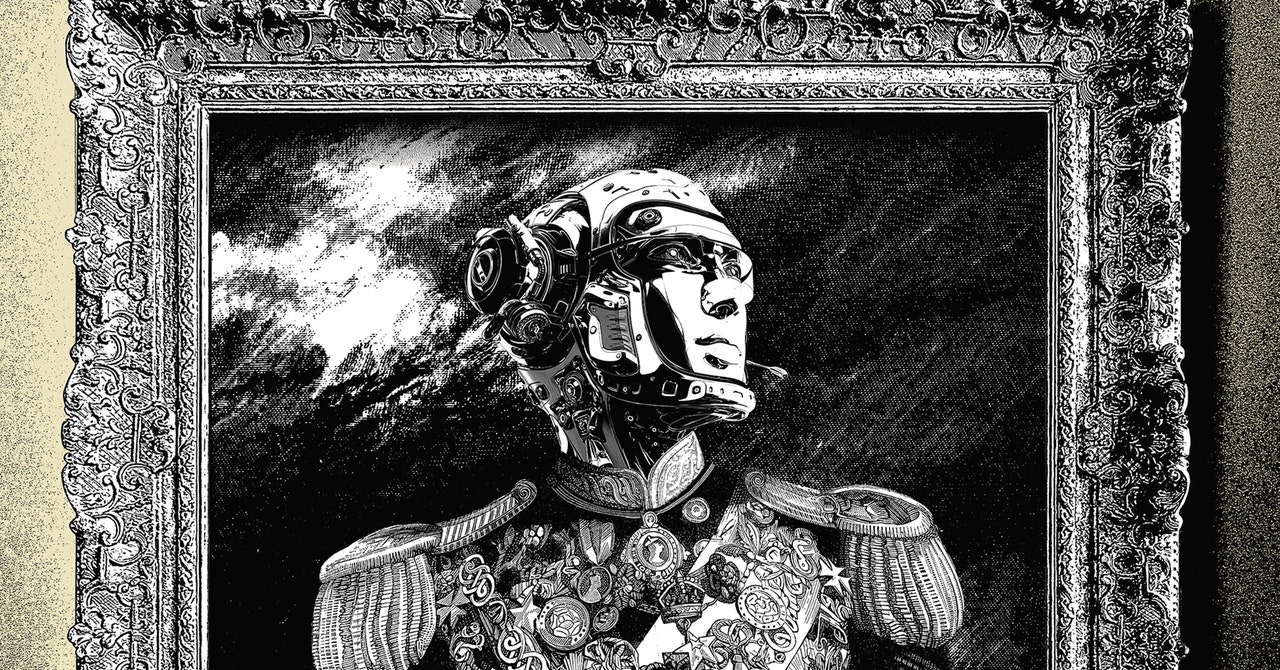

San Francisco was going to get killer robots

Is It Okay to Give a Robot the Right to Kill Humans on the Streets of San Francisco? A Prologue to the December 5 Reversal

A week is a long time in politics—particularly when considering whether it’s okay to grant robots the right to kill humans on the streets of San Francisco.

“There are a whole lot of reasons why it’s a bad idea to arm robots,” says Peter Asaro, an associate professor at The New School in New York who researches the automation of policing. He believes the decision is part of a broader movement to militarize the police. An armed robot might be useful in a hostage situation, but it could also be used for other purposes. “That’s detrimental to the public, and particularly communities of color and poor communities.”

The reversal is partly the result of the huge public outcry and lobbying that followed the initial approval. People worried that removing humans from life-and-death decisions would be a step too far. On December 5, a protest took place outside San Francisco City Hall, and at least one supervisor who initially voted for the policy later said they regretted their choice.

The supervisor for San Francisco’s fourth district had voted for the policy despite his “deep concerns” about it. He later said: “I regret it. I’ve grown increasingly uncomfortable with our vote & the precedent it sets for other cities without as strong a commitment to police accountability. I do not think making state violence more remote, distanced, & less human is a step forward.”

The WIRED Project: War, Security, and the War of Crime in the 21st Century – An Overview of Recent Trends in Ukrainian Warfare

It will no longer be possible to sneak up on a country with an army, navy, or air force, or to hide what those forces are doing. Armed forces around the world will try to counter this by assembling, moving from home bases, and maneuvering on the front lines in more dispersed ways, hiding as much as possible in plain sight. In northern Ukraine, for example, a fleet of vans moves small amounts of heavy infantry rounds along a variety of routes from west to east.

The story is from WIRED's annual trends reporting.

The Ukrainians have deployed Turkish Bayraktar teleoperated weapons against tanks and other targets since the early days of the war. The weapons cost around US$5 million each and are more vulnerable and less effective than Shaheds. Remotely piloted boats have been used in small-scale naval operations, and commercial remote-controlled drones have been adapted to drop grenades. Teleoperation becomes more difficult as jamming systems become the norm.

The nature of war will always be about killing people and breaking their stuff faster than they can do the same to you. It will still be a contest of wills, an aspect of the human condition whose irrationality is far from being eradicated. Reason, emotion and chance will still shape the outcome. Technology only changes how we fight, not why.

Tech Company, Artificial Intelligence, and the War on the Internet of Things (Technology & Science in the Military), a Memorandum of Eric Schmidt

In 2001, when Google's search engine was barely making any money, Eric Schmidt joined the company as its CEO. He stepped away from Alphabet in 2017 after building a sprawling, highly profitable company with a stacked portfolio of projects, including cutting-edge artificial intelligence, self-driving cars, and quantum computers.

Artificial intelligence is mostly being created in the private sector. The best tools that could prove critical to the military, such as algorithms capable of identifying enemy hardware or specific individuals in video, or that can learn superhuman strategies, are built at companies like Google, Amazon, and Apple or inside startups.

In an earlier speech, Schmidt outlined what would amount to an enormous overhaul of the most powerful military operation on Earth: “We would start a tech company.” He goes on to sketch out a vision of the internet of things with a deadly twist. “It would build a large number of inexpensive devices that were highly mobile, that were attritable, and those devices, or drones, would have sensors or weapons, and they would be networked together.”

Instead of blocking progress in Geneva, countries should engage with the scientific community to develop the technical and legal measures that could make a ban on autonomous weapons verifiable and enforceable. Many of the open questions are technical. What physical parameters should be used to define a lower limit on the size or payload of weapons? How should production and sale be managed, and which primitive platforms could be scaled up to full autonomy? Should design constraints be used, such as requiring a ‘recall’ signal? Can firing circuits be physically separated from on-board computation, so that human-piloted weapons cannot easily be converted into autonomous weapons? Can verifiable protocols be designed to prevent accidental escalation of hostilities between autonomous systems?

A few nations already have weapons that operate without direct human control in limited circumstances, such as missile defenses that need to respond at superhuman speed to be effective. Greater use of AI might mean more scenarios where systems act autonomously, for example when drones are operating out of communications range or in swarms too complex for any human to manage.

Weapons already in use in the conflict are starting down the road to full autonomy. For example, Russia is deploying ‘smart’ cruise missiles to harsh effect, hitting predefined targets such as administrative buildings and energy installations. Ukrainians call the Iranian Shahed drones ‘mopeds’ because of the noise of their engines. The drones can fly low along rivers to avoid detection and can circle an area while waiting for instructions. Key to these attacks is the use of swarms of missiles to overwhelm air-defence systems, along with minimal radio links to avoid detection. I’ve heard that new Shaheds are being equipped with the ability to home in on nearby heat sources without the need for controllers to update them, which would be an important step towards full autonomy.

Lethal autonomous weapons have been available for several years. For example, since 2017, a government-owned manufacturer in Turkey (STM) has been selling the Kargu drone, which is the size of a dinner plate and carries 1 kilogram of explosive. The company claimed on its website that the drones can autonomously and deliberately hit vehicles and people, selecting targets from images and by tracking moving targets. A UN report describes Libya's Government of National Accord using these drones to hunt down retreating forces.

Negotiations under the CCW (the UN Convention on Certain Conventional Weapons) have made little progress because of confusion, real or feigned, about technical issues. Countries still debate the meaning of the word ‘autonomous’. Absurdly, for example, Germany declared that a weapon is autonomous only if it has “the ability to learn and develop self-awareness”. China says that as weapons become capable of distinguishing between civilians and soldiers, they won’t be banned. The United Kingdom has pledged never to develop or use lethal autonomous weapons, but has shifted its definition of them in ways that make the pledge meaningless. For example, in 2011, the UK Ministry of Defence wrote that “a degree of autonomous operation is probably achievable now”, but in 2017 stated that “an autonomous system is capable of understanding higher-level intent”. Michael Fallon, then secretary of state for defence, wrote in 2016 that “fully autonomous systems do not yet exist and are not likely to do so for many years, if at all”, and concluded that “it is too soon to ban something we simply cannot define” (see go.nature.com/3xrztn6).

The case for autonomous weapons as a way to protect civilians depends on two claims. The first, that artificial intelligence is less likely to make mistakes than humans, is questionable. The second is that autonomous weapons will be used in essentially the same scenarios as human-controlled weapons such as rifles, tanks and Predator drones. This seems to me to be false. If autonomous weapons are used more often, by different parties with differing goals and in less clear-cut settings such as insurrections, any advantage they have in distinguishing civilians from soldiers becomes irrelevant. I think the emphasis on the weapons’ claimed superiority in differentiating civilians from fighters is misguided, particularly given the UN report pointing to risks of misidentification.

In my view — and I suspect that of most people on Earth — the best solution is simply to ban lethal autonomous weapons, perhaps through a process initiated by the UN General Assembly. Another possibility, suggested as a compromise measure by a group of experts (see go.nature.com/3jugzxy) and formally proposed to the international community by the International Committee of the Red Cross (see go.nature.com/3k3tpan), would ban anti-personnel autonomous weapons. Like the St Petersburg Declaration of 1868, which prohibited exploding ordnance lighter than 400 grams, such a treaty could place lower limits on the size and payload of weapons, making it impossible to deploy vast swarms of small devices that function as weapons of mass destruction.

How to build confidence in a Nuclear-Weapon-Free Zone: Recent Developments in the Organization for the Prohibition of Chemical Weapons

Further progress in Geneva is unlikely any time soon. The United States and Russia refuse to allow negotiations on a legally binding agreement. The United States worries that a treaty would be unverifiable, leading other parties to circumvent a ban and creating a risk of strategic surprise. Russia objects to being ignored because of its actions in Ukraine.

Rather than blocking negotiations, it would be better for the United States and others to focus on devising practical measures to build confidence in adherence. These could include inspection agreements, design constraints that deter conversion to full autonomy, rules requiring industrial suppliers to check the bona fides of customers, and so on. It would make sense to discuss the remit of an AI version of the Organization for the Prohibition of Chemical Weapons, which has devised similar technical measures to implement the Chemical Weapons Convention. These measures have neither overburdened the chemical industry nor curtailed chemistry research. Similarly, the New START treaty between the United States and Russia allows 18 on-site inspections of nuclear-weapons facilities each year. The Comprehensive Nuclear-Test-Ban Treaty might never have succeeded if not for scientists from all sides working together to develop the International Monitoring System.

A meeting of Latin American and Caribbean nations will be held in Costa Rica, with threats from non-state actors on the agenda. These same nations organized the first nuclear-weapon-free zone, raising hopes that they might also initiate a treaty declaring an autonomous-weapon-free zone.

The new US declaration is a potential building block for more responsible uses of military artificial intelligence around the world.