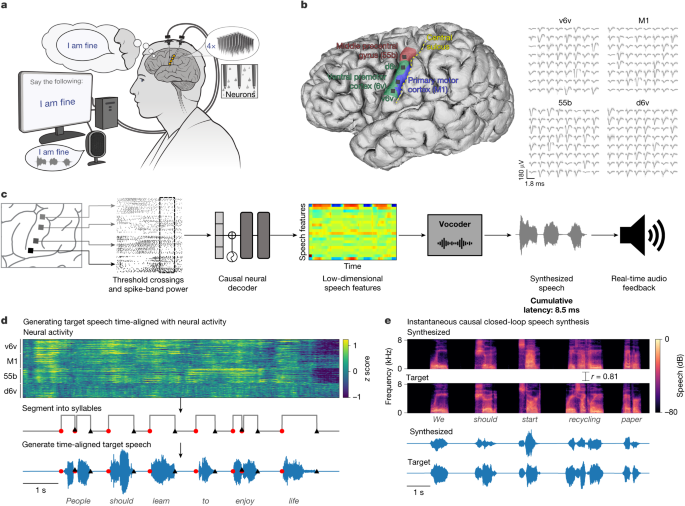

A voice synthesis

World first: brain implant lets man speak with expression and sing almost instantly, according to a study of a 45-year-old man with a severe speech disability

A man with a severe speech disability is able to speak expressively and sing using a brain implant that translates his neural activity into words almost instantly. The device conveys changes of tone when he asks questions, emphasizes the words of his choice and allows him to hum a string of notes in three pitches.

“This is the holy grail in speech BCIs,” says Christian Herff, a computational neuroscientist at Maastricht University, the Netherlands, who was not involved in the study. “This is real, continuous speech.”

The study participant, a 45-year-old man, lost his ability to speak clearly after developing amyotrophic lateral sclerosis, a form of motor neuron disease, which damages the nerves that control muscle movements, including those needed for speech. Although he could still make sounds and mouth words, his speech was slow and unclear.

Source: World first: brain implant lets man speak with expression ― and sing

The Effect of Neural Activity and Biosignals on Speech Synthesis: An Empirical Study of Human Listening and Acoustic Contamination

“We don’t always use words to communicate what we want. We have interjections. We have other expressive vocalizations that are not in the vocabulary,” explains Wairagkar. “In order to do that, we have adopted this approach, which is completely unrestricted.”

The team also personalized the synthetic voice to sound like the man’s own, by training AI algorithms on recordings of interviews he had done before the onset of his disease.

a. Three example trials, one for each of three types of speech task, showing the audio recording, its spectrogram, and the activity of the two neural electrodes most correlated with the audio. Even these two electrodes do not show the regular structure visible in the audio spectrogram. Neural activity increases during speech preparation, before any sound is produced, which argues against acoustic contamination. The last word of the emphasis example is not fully vocalized, yet it shows an increase in neural activity similar to that for the other words. The bottom row shows the contamination matrices and statistical criteria; the P value indicates whether a trial is significantly acoustically contaminated.

b. An example speech trial with simultaneous recording of neural signals and biosignals from a microphone and IMU sensors. Three biosignals were used to train independent decoders to synthesize speech.

c. Speech could not be synthesized from biosignals that measure movement and sound. (Left) Cross-validated Pearson correlation coefficients (mean ± s.d.) between the target speech and speech synthesized from neural signals, from each biosignal, and from all biosignals together. Speech synthesized from neural activity is far more accurate than speech synthesized from biosignals. (Right) The distributions of Pearson correlation coefficients for speech decoded from biosignals and from neural signals are mostly non-overlapping, indicating that synthesis quality from biosignals is much lower than that from neural signals.

d. To assess the intelligibility of speech synthesized from neural activity and from biosignals (stethoscopic microphone decoder), naive human listeners performed open transcription of the same 30 trials synthesized by each decoder. Median phoneme and word error rates for neural decoding were significantly lower (43.60%) than those for decoding stethoscope recordings, which had a word error rate of 100%. This indicates that intelligible speech cannot be decoded from these non-neural biosignals.
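The two metrics named in panels c and d, the Pearson correlation coefficient and the phoneme/word error rate, can be sketched as below. This is a minimal illustration, not the study's evaluation code: it assumes the synthesized and target speech have already been reduced to equal-length numeric sequences (for example, one acoustic feature per time frame), and that listener transcripts are already tokenized into words or phonemes. The function names `pearson_r`, `edit_distance` and `error_rate` are hypothetical, and the cross-validation and statistical testing described in the caption are not reproduced.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    # Covariance and variances (unnormalized; the 1/n factors cancel).
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(vx * vy)


def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance (substitutions, insertions, deletions) between token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete a reference token
                curr[j - 1] + 1,         # insert a hypothesis token
                prev[j - 1] + (r != h),  # substitute (cost 0 if tokens match)
            )
        prev = curr
    return prev[-1]


def error_rate(ref: list[str], hyp: list[str]) -> float:
    """Word or phoneme error rate: edit distance divided by reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


if __name__ == "__main__":
    print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))  # 1.0
    ref = "i want to go home".split()
    print(error_rate(ref, "i want go home".split()))  # 0.2 (one deletion)
    print(error_rate(["a", "b", "c"], ["x", "y", "z"]))  # 1.0, i.e. 100%
```

Passing word lists to `error_rate` gives a word error rate; passing phoneme lists gives a phoneme error rate. A transcript in which no reference word is recovered yields 100%, the figure reported for the stethoscope decoder.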