The race for new approaches to assessing Artificial Intelligence is on after the Turing test was broken

The ARC is not a language-only bot: How good can GPT-4 be for Cognition and Rational Reasoning?

“We showed that the machines are still not able to get anywhere near the level of humans,” says Mitchell. She says that it was surprising that it was able to solve some of the problems.

TheARC was organized in 2020 by a company called Chollet. The bot that won the contest was specifically trained to solve ARC-like tasks but had no general capabilities; it got only 21% of the problems right. The people solve 80% of the time. Several teams of researchers have now used the ARC to test the capabilities of LLMs; none has come close to human performance.

Sam Acquaviva is a Computational Cognitive scientist at the Massachusetts Institute of Technology. “I would be shocked,” he says. He notes that another team of researchers has tested GPT-4 on a benchmark called 1D-ARC, in which patterns are confined to a single row rather than being in a grid8. That should erase some of the unfairness, he says. The LLM was not able to grasp the underlying rule and reasoning due to the performance improvement of GPT-4.

It was difficult for GPT-4 due to the limitations in the way the test is done. The publicly available version of the LLM can accept only text as an input, so the researchers gave GPT-4 arrays of numbers that represented the images. A blank row is 0 and a colourful square is a number. Humans simply saw the images. “We are comparing a language-only system with humans, who have a highly developed visual system,” says Mitchell. “So it might not be a totally fair comparison.”

OpenAI has created a ‘multimodal’ version of GPT-4 that can accept images as input. Mitchell doesn’t think the multimodal GPT-4 would do any better, so she is waiting for that to be publicly available before they test ConceptARC on it. “I don’t think these systems have the same kind of abstract concepts and reasoning abilities that people have,” she says.

The LLM is not just anthropology, but it is thinking like us, as we are thinking under the covers: A case study on GPT-4 in partnership with ConceptARC

Mitchell says the report doesn’t explore the LLM’s capabilities in a systematic way. “It’s more like anthropology,” she says. To prove that a machine has theory of mind, he would need evidence of a human-like cognitive process, not just that the machine can output the same answers as a person.

On the flip side, LLMs also have capabilities that people don’t — such as the ability to know the connections between almost every word humans have ever written. This might allow the models to solve problems by relying on quirks of language or other indicators, without necessarily generalizing to wider performance, says Mitchell.

Wortham offers advice to anyone trying to understand AI systems — avoid what he calls the curse of anthropomorphization. He says we exaggerate anything that appears to demonstrate intelligence.

It is a curse because it is impossible to think of things that do not display goal-oriented behavior or use human models. “And we’re imagining that the reason it’s doing that is because it’s thinking like us, under the covers.”

The researchers fed the ConceptARC tasks to GPT-4 and to 400 people enlisted online. The humans received an average score of more than 85% on all concept groups, but GPT-4 only got a meager 33% on one group and less than 30% on the rest.

ConceptARC: A Challenge to Human Intelligence to Break the Classification Sense of the Universe and the Distinction It Exhibits

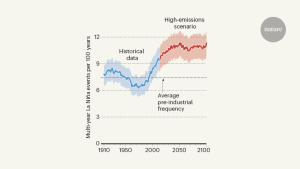

To test the concept of sameness, one puzzle needs to keep objects aligned along the same axis and another requires the solvers to keep objects in a certain pattern. The goal of this was to reduce the chances that an AI system could pass the test without grasping the concepts (see ‘An abstract-thinking test that defeats machines’).

Mitchell and her colleagues were able to make a set of fresh puzzles that were inspired by some of the more well-known pieces of literature. The ConceptARC tests are easier: Mitchell’s team wanted to ensure the benchmark would not miss progress in machines’ capabilities, even if small. One of the differences was that the team chose certain concepts to test and then created a bunch of puzzles for each of them that were variations on a theme.

Lake says that the ability to apply everyday knowledge to previously unseen problems is a hallmark of human intelligence.

Bubeck explains that GPT-4 does not think like a person and for any capabilities that it displays, it achieved it in its own way.

Language detection and cognition in the Loebner Prize and LLMs: Who is reading it? What can it teach us about artificial intelligence?

But the idea of leveraging language to detect whether a machine is capable of thought endured. For several decades, the businessman and philanthropist Hugh Loebner funded an annual Turing test event known as the Loebner Prize. Human judges attempted to guess which machine or person it was that they engaged in text-based dialogues with. Rob Wortham says that the yearly gatherings stopped after the death of Loebner because the money for it ran out. There was a competition hosted on Loebner’s behalf by the UK Society for the Study of Artificial Intelligence and Simulation of Behaviour. He says that LLMs would now stand a good chance of fooling humans in such a contest; it’s a coincidence that the events ended shortly before LLMs really took off.

According to Nick Ryder, the performance of a test may not correlate with a person’s score on the same test. “I don’t think that one should look at an evaluation of a human and a large language model and derive any amount of equivalence,” he says. The OpenAI scores are “not meant to be a statement of human-like capability or human-like reasoning. It is meant to be a statement of how the model performs.

There is a deeper problem in interpreting what the benchmarks mean. A person’s high scores on the exams would be a sign of their general intelligence though, as long as it’s not a fuzzy concept, according to one definition. A person who can do well at the exams is often assumed to do well at other cognitive tests and has grasped certain abstract concepts. But that is not at all the case for LLMs, says Mitchell; these work in a very different way from people. “Extrapolating in the way that we extrapolate for humans won’t always work for AI systems,” she says.

The key, he says, is to take the LLM outside of its comfort zone. He suggests presenting it with scenarios that are different from what the LLM has seen before. In many cases, the LLM answers by saying things that are most likely to be connected with the original question, rather than giving the correct answer to the new scenario.

It looked at the questions and training data to see if they had the same strings of words. When it tested the LLMs before and after removing the similar strings, there was little difference in performance, suggesting that successes couldn’t be attributed largely to contamination. However, some researchers have questioned whether this test is stringent enough.

Mitchell says that a lot of language models can do well. “But often, the conclusion is not that they have surpassed humans in these general capacities, but that the benchmarks are limited.” Modelling on so much text can make it hard for models to figure out if a question is correct, as they can’t see how similar it is in training data. This issue is known as contamination.

Source: https://www.nature.com/articles/d41586-023-02361-7

When is GPT-4 a Test of Artificial Intelligence for LLM Detection? — A Think Experiment by Chollet

It is a kind of game that researchers familiar with LLMs can probably still win. By taking advantage of the weaknesses of the systems, he would be able to detect an LLM. If you ask me if I am talking to an LLM right now, would I want to? I would definitely be able to tell you,” says Chollet.

Some are not sure if using a test for computer science is a good idea. It is all about making the jury believe that you are lying. The test encourages chatbot developers to use an artificial intelligence to perform tricks, rather than develop useful or interesting capabilities.

When GPT-4 was released in March this year, the firm behind it — OpenAI in San Francisco, California — tested its performance on a series of benchmarks designed for machines, including reading comprehension, mathematics and coding. Most of them were aced by GPT-4. The GPT-4 contains several subject-specific tests designed for US high- school students known as Advanced Placement and a standard test used in the selection process for US graduate studies. GPT-4 attained a score that placed it in the top 10% of people, OpenAI reported, and was achieved in part because of the Uniform Bar Exam.

According to other researchers, GPT-4 and other LLMs will probably pass the Turing test, in that they can fool a lot of people, at least for short conversations. More than 1.5 million people played a game that was based on the Turing test, according to researchers at the company. Players were assigned to chat for two minutes, either to another player or to an LLM-powered bot that the researchers had prompted to behave like a person. The players correctly identified about 40% of the time, which is not great, according to researchers.

Turing did not specify many details about the scenario, notes Mitchell, so there is no exact rubric to follow. “It was not meant as a literal test that you would actually run on the machine — it was more like a thought experiment,” says François Chollet, a software engineer at Google who is based in Seattle, Washington.

Can Machines Think? A Logic Puzzle Analysis of Many Language Models and Bots, with Applications to Text-Based Artificial Intelligence

The world’s best artificial intelligence systems pass tough exams, write well in essays and chat so well that people find their output indistinguishable from their own. What can they do? Solve simple visual logic puzzles.

“There’s very good smart people on all sides of this debate,” says Ullman. He says there is no conclusive evidence supporting either opinion which is the reason for the split. It’s not possible to point at something and say “beep beep”, says Ullman.

In the past two to three years, LLMs have blown previous AI systems out of the water in terms of their ability across multiple tasks. The work they do is done by using a statistical correlation between the words in online sentences to generate plausible next words when given an input text. There is more to a chatbot built on LLMs: human trainers give feedback on how the bot responds.

The team behind the logic puzzles aims to provide a better benchmark for testing the capabilities of AI systems — and to help address a conundrum about large language models (LLMs) such as GPT-4. They breeze through what once were considered landmark feats of machine intelligence. They seem less impressive after being tested another way.

“I propose to consider the question, ‘Can machines think?” A seminal 1950 paper was written by British mathematician and computing and mathematical genius Alan Turing.

Untangling the Mechanisms that Make up the Behaviours of Large Molecule Molecular Medicine (LLMs)

Confidence in a medicine doesn’t just come from observed safety and efficacy in clinical trials, however. Understanding the mechanism that causes its behaviour is also important, allowing researchers to predict how it will function in different contexts. It’s necessary to untangle the mechanisms that make up the behaviours of LLMs for similar reasons.